The Superworker Sampling Bias on Mechanical Turk

By Aaron Moss, PhD & Leib Litman, PhD

When requesters post tasks on Mechanical Turk (MTurk), workers complete those tasks on a first-come, first-served basis. This method of task distribution has important implications for research, including the potential to introduce a form of sampling bias known as the superworker problem.
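To see why first-come, first-served distribution favors the most active workers, here is a toy simulation. It is a minimal sketch under a loudly stated assumption: each worker's delay in reaching a newly posted study is drawn from an exponential distribution whose rate equals that worker's activity level. The pool sizes, activity levels, and the `first_come_sample` helper are all hypothetical and are not drawn from MTurk data.

```python
import random

def first_come_sample(workers, slots, rng):
    """Fill `slots` participation slots strictly in arrival order.

    Toy assumption: each worker's arrival delay is exponential with rate equal
    to their activity level, so more active workers tend to arrive sooner.
    """
    delays = [(rng.expovariate(w["activity"]), i) for i, w in enumerate(workers)]
    delays.sort()  # earliest arrivals first
    return [workers[i] for _, i in delays[:slots]]

rng = random.Random(42)
# Hypothetical pool: 100 highly active workers and 900 occasional ones.
pool = ([{"super": True, "activity": 10.0} for _ in range(100)]
        + [{"super": False, "activity": 1.0} for _ in range(900)])

sample = first_come_sample(pool, slots=200, rng=rng)
share = sum(w["super"] for w in sample) / len(sample)
print(f"Superworkers: 10% of the pool, {share:.0%} of the sample")
```

Under these assumptions, superworkers make up 10% of the pool but a much larger share of the 200 filled slots, because nothing in a first-come, first-served queue offsets their speed advantage.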

In this post, we outline the scope of the superworker problem on MTurk and what researchers can do to mitigate it.

How Do “Professional” Survey Respondents Affect Sampling on MTurk?

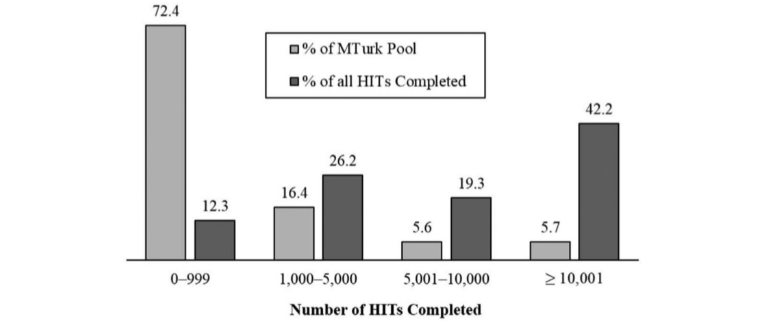

Superworkers on Mechanical Turk are workers who, because they are very active on the platform, complete a larger share of tasks than their share of the worker population. For example, previous research has suggested that 20% of workers may complete 80% of all tasks. In a study we conducted, however, we found that the problem is more severe: as Figure 1 shows, the most active 5.7% of workers complete about 40% of all research HITs (Human Intelligence Tasks).

Figure 1. Percentage of MTurk workers in each experience group and the share of HITs completed by each group.
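To make the concentration in Figure 1 concrete, the sketch below computes two summaries: the share of HITs completed by the most active fraction of workers, and how many HITs an average member of that group completes for every HIT completed by an average worker outside it. The function names and the eight-worker data set are hypothetical; only the 5.7% and 40% figures come from the study described above.

```python
def top_share(hit_counts, top_fraction):
    """Share of all HITs completed by the most active `top_fraction` of workers."""
    ranked = sorted(hit_counts, reverse=True)
    k = max(1, round(len(ranked) * top_fraction))  # size of the top group
    return sum(ranked[:k]) / sum(ranked)

def per_capita_ratio(group_pct, share_pct):
    """HITs completed by an average group member per HIT completed
    by an average worker outside the group."""
    inside = share_pct / group_pct
    outside = (100 - share_pct) / (100 - group_pct)
    return inside / outside

# Hypothetical HIT counts for eight workers
print(top_share([100, 50, 10, 10, 10, 10, 5, 5], 0.25))  # 0.75

# Figure 1: the most active 5.7% of workers complete about 40% of research HITs
print(f"{per_capita_ratio(5.7, 40):.1f}")  # 11.0
```

On the Figure 1 numbers, a worker in the most active group completes roughly 11 times as many research HITs as the average worker outside that group.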

Superworkers pose two potential problems for behavioral researchers using MTurk. First, because they have completed so many tasks, superworkers are often familiar with common research measures and manipulations, a condition referred to as non-naïveté. Participants who are familiar with study measures may change their behavior or response patterns, potentially lowering effect sizes (Chandler et al., 2015).

Second, superworkers are often young and male, so a study whose sample contains many superworkers may be demographically skewed.

Which Tasks Are Most Affected by Superworkers?

There are several reasons why superworkers complete more than their share of tasks on MTurk. Understanding these factors can help researchers decide when superworkers are a problem for their study and how to mitigate sampling bias.

First, as previous research has reported, requesters often use worker qualifications that give superworkers an advantage when searching for tasks. Commonly used qualifications make the least experienced workers ineligible, which limits the competition superworkers face for each task.
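As an illustration, the sketch below builds qualification requirements of the conventional kind as Python dicts in the shape that boto3's MTurk `create_hit` call accepts for its `QualificationRequirements` parameter, then checks two hypothetical workers locally. The 95% approval and 100-HIT thresholds are illustrative assumptions, and `requirement` and `is_eligible` are hypothetical helpers, not part of any MTurk library; on MTurk itself, eligibility is enforced by the platform.

```python
import operator

# MTurk system qualification type IDs
PERCENT_APPROVED = "000000000000000000L0"      # Worker_PercentAssignmentsApproved
NUMBER_HITS_APPROVED = "00000000000000000040"  # Worker_NumberHITsApproved

def requirement(qual_id, comparator, value):
    """One entry for the QualificationRequirements list passed to create_hit."""
    return {
        "QualificationTypeId": qual_id,
        "Comparator": comparator,
        "IntegerValues": [value],
        "ActionsGuarded": "DiscoverPreviewAndAccept",
    }

# Illustrative thresholds of the kind requesters commonly use (assumed values)
conventional = [
    requirement(PERCENT_APPROVED, "GreaterThanOrEqualTo", 95),
    requirement(NUMBER_HITS_APPROVED, "GreaterThanOrEqualTo", 100),
]

# Local stand-in for MTurk's check: a worker must satisfy every requirement
COMPARATORS = {
    "GreaterThanOrEqualTo": operator.ge,
    "GreaterThan": operator.gt,
    "LessThan": operator.lt,
    "LessThanOrEqualTo": operator.le,
}

def is_eligible(worker, requirements):
    return all(
        COMPARATORS[r["Comparator"]](worker[r["QualificationTypeId"]], r["IntegerValues"][0])
        for r in requirements
    )

# Hypothetical workers: a newcomer with a perfect record and a veteran
newcomer = {PERCENT_APPROVED: 100, NUMBER_HITS_APPROVED: 20}
veteran = {PERCENT_APPROVED: 99, NUMBER_HITS_APPROVED: 12000}
print(is_eligible(newcomer, conventional), is_eligible(veteran, conventional))  # False True
```

The newcomer is excluded despite a perfect approval rating, which is how conventional qualifications shrink the pool competing with superworkers.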

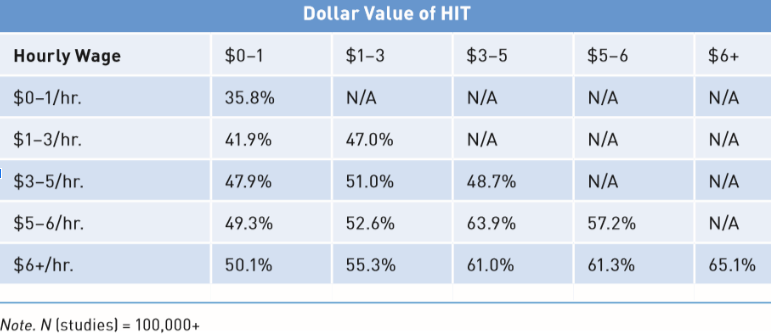

Second, superworkers are more likely than other MTurk workers to rely on tools and scripts that help them find and complete tasks quickly. One of the most important pieces of information superworkers use to select tasks is the pay rate. As Table 1 shows, as the pay rate for a study increases, so does the percentage of superworkers in the study's sample.

Table 1. Percentage of superworkers in study samples, by the compensation offered per HIT and the HIT pay rate.

How Can Researchers Limit the Number of Non-Naive Respondents in a Survey?

One way to limit superworkers is to invert how worker qualifications are currently used. Rather than using qualifications to exclude workers with a low approval rating or few completed HITs, researchers could use qualifications either to exclude workers who have completed more than a set number of HITs (e.g., 5,000) or to limit their participation by stratifying the sample. Stratifying a sample, by opening a portion of a study's participation slots to workers at each level of experience, may be preferable to excluding superworkers outright because it helps keep data collection from slowing too much. The trade-offs of different sampling strategies are discussed in detail in Robinson, Rosenzweig, Moss, and Litman (2019).
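Both strategies can be sketched in a few lines. `exclude_superworkers` builds the inverted requirement using the 5,000-HIT example from the text, in the dict shape boto3's `create_hit` accepts. `stratified_sample` is a hypothetical helper that fills slots first-come, first-served but caps each experience band at a quota. The band edges, quotas, and arrival order are assumptions for illustration, and how each band would be enforced on MTurk itself (for example, through separate batches with their own qualifications) is not shown here.

```python
NUMBER_HITS_APPROVED = "00000000000000000040"  # MTurk Worker_NumberHITsApproved

def exclude_superworkers(max_hits=5000):
    """Inverted qualification: admit only workers with fewer than `max_hits` approved HITs."""
    return [{
        "QualificationTypeId": NUMBER_HITS_APPROVED,
        "Comparator": "LessThan",
        "IntegerValues": [max_hits],
        "ActionsGuarded": "DiscoverPreviewAndAccept",
    }]

def stratified_sample(arrivals, strata, quotas):
    """Admit workers first-come, first-served, but cap each experience band.

    arrivals: (worker_id, hits_approved) pairs in arrival order
    strata:   (label, lower, upper) bands with lower <= hits_approved < upper
    quotas:   label -> number of participation slots for that band
    """
    filled = {label: [] for label, _, _ in strata}
    for worker_id, hits in arrivals:
        for label, lower, upper in strata:
            if lower <= hits < upper:
                if len(filled[label]) < quotas[label]:
                    filled[label].append(worker_id)
                break  # each worker belongs to exactly one band
    return filled

# Hypothetical bands, quotas, and arrival order (most experienced arrive first)
strata = [("new", 0, 500), ("mid", 500, 5000), ("super", 5000, float("inf"))]
quotas = {"new": 2, "mid": 2, "super": 1}
arrivals = [("w1", 20000), ("w2", 15000), ("w3", 9000), ("w4", 1200),
            ("w5", 30), ("w6", 800), ("w7", 100), ("w8", 2500), ("w9", 50)]

print([w for w, _ in arrivals[:5]])  # unstratified: ['w1', 'w2', 'w3', 'w4', 'w5']
print(stratified_sample(arrivals, strata, quotas))
# {'new': ['w5', 'w7'], 'mid': ['w4', 'w6'], 'super': ['w1']}
```

Filling the first five slots without stratification admits three superworkers; with the quotas, only one superworker is admitted, while superworkers are still represented and the early arrivals keep filling the other bands.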

To learn more about other sampling issues on Mechanical Turk, including time-of-day biases, participant dropout, and participant turnover, and the best ways to deal with them, check out the newest book in SAGE's Innovation in Methods Series, Conducting Online Research on Amazon Mechanical Turk and Beyond.

References

Chandler, J., Paolacci, G., Peer, E., Mueller, P., & Ratliff, K. A. (2015). Using nonnaive participants can reduce effect sizes. Psychological Science, 26(7), 1131–1139.

Robinson, J., Rosenzweig, C., Moss, A. J., & Litman, L. (2019). Tapped out or barely tapped? Recommendations for how to harness the vast and largely unused potential of the Mechanical Turk participant pool. PLoS ONE, 14(12).