In 1945, Admiral William D. Leahy, President Truman’s Chief of Staff and one of the nation’s foremost explosives experts, made a prediction about the atomic bomb: “That is the biggest fool thing we have ever done. The bomb will never go off, and I speak as an expert in explosives.”

History proved him catastrophically wrong.

Here’s what this tells us: expertise in the present doesn’t guarantee vision for the future. The most dangerous blind spots often belong to those who know the current system best, because mastery of today can make tomorrow’s disruptions invisible.

At CloudResearch, we’ve built our entire security philosophy around a different principle: operate before the threat arrives.

What It Means to Be an “Early Thinker”

Most companies position themselves somewhere on the classic technology adoption curve: innovators, early adopters, early majority, late majority, or laggards. That framework assumes the innovation already exists and the question is merely when to adopt it.

We reject that framing entirely.

CloudResearch doesn’t wait for innovations to appear on the adoption curve. We position ourselves before the curve even begins, working to understand and prepare for threats and opportunities that don’t yet exist in the mainstream consciousness. While others are still evaluating whether a new technology matters, we’re already stress-testing our defenses against it.

This comes down to a fundamental truth: in data quality and security, being reactive means being too late.

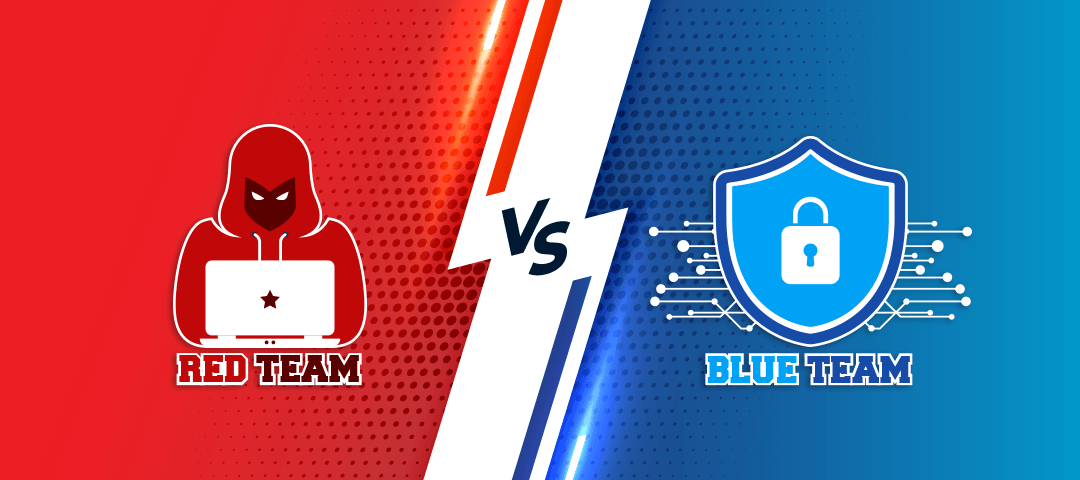

The Red Team / Blue Team Philosophy

Most companies approach security by building walls and hoping they hold. We take a different approach.

CloudResearch operates two parallel teams with opposing missions:

The Red Team’s job is to break us. They develop sophisticated attacks against our own platforms, using cutting-edge tools and techniques that don’t yet exist in the wild. They build AI agents more capable than anything commercially available. They simulate fraud patterns we haven’t seen yet. They think like attackers who are six months to two years ahead of current threats.

The Blue Team’s job is to defend against everything the Red Team discovers. Every successful Red Team attack becomes a Blue Team defense project. Every vulnerability the Red Team exposes gets patched. Every new fraud pattern the Red Team develops gets added to our detection systems.

This creates a continuous adversarial cycle. Our Red Team doesn’t just test whether our defenses work today. They test whether our defenses will work against tomorrow’s threats.

The result: CloudResearch is often defending against attacks that haven’t happened yet, with countermeasures built for techniques that have not yet appeared in the wild.

This approach requires investment. It means dedicating engineering resources to problems that offer no immediate ROI. It means sometimes appearing to “over-engineer” solutions to threats that others don’t yet recognize.

But it’s the only way to stay ahead.

Data Quality as Our North Star

There’s a reason we invest so heavily in this proactive approach: data quality isn’t a feature for us. It’s our entire value proposition.

Researchers come to CloudResearch because they need to trust their data. A single survey contaminated by fraud can invalidate months of work. A poll corrupted by bots can mislead policymakers. Bad data doesn’t just waste money; it produces false insights that lead to wrong decisions.

We take that responsibility seriously. Every system we build, every protocol we establish, every investment we make in security ultimately serves one goal: ensuring that when researchers use CloudResearch, they’re getting authentic human insights, not noise.

Why This Matters Right Now

Last week, as I was finalizing this piece, two major developments validated everything we’ve been building toward. First, a Dartmouth researcher published findings in the Proceedings of the National Academy of Sciences demonstrating that AI agents can complete surveys with 99.8% accuracy on attention checks, rendering most current detection methods obsolete. Second, Harvard Business Review reported that generative AI will disrupt the $140 billion market research industry in 2026, with venture-backed companies openly marketing “synthetic respondents” as replacements for human panels.

The threat we anticipated years ago just became undeniably real.

While the academic world scrambles to understand the problem and commercial platforms rush to deploy AI replacements for human respondents, we’ve spent years building defenses. Our Red Team already uses sophisticated AI agents to attack our own systems. Our Blue Team has developed countermeasures for threats that don’t yet exist in the wild.

That’s what early thinking looks like in practice. That’s what it means to operate before the adoption curve even begins.

Want to see the evidence? Read our analysis of the AI agent threat, what we’re actually detecting (and not detecting), and why Human Intelligence matters: “The AI Bot Threat to Survey Research: Why We’re Not Seeing It (Yet)”